Americans have been watching television since the late 1940s. While the concept was introduced to U.S. audiences at the World's Fair in 1939, World War II interrupted its rollout, and it wasn't until 1948 that the television model we know was popularized with the first hit show, Milton Berle's "Texaco Star Theater."

Since then, we've seen a lot of changes. For decades, if you wanted to watch a show you loved, it meant sitting in front of your television at the correct time. If you were interrupted, you just had to hope for a rerun to catch the parts you missed.

But after the rise of home video in the 1980s, many of us began taping the shows we loved in order to watch them on our own schedule. We were no longer at the mercy of the TV listings, because we decided when we'd watch our programming: We were shifting the time the show aired for our personal convenience, a practice known as time shifting.

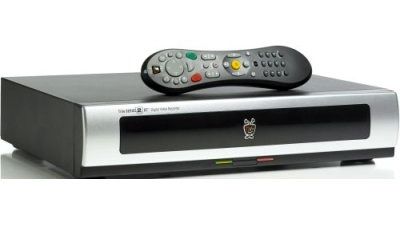

The first digital video recorders (DVRs), including TiVo and ReplayTV, were introduced in 1999. DVRs made time shifting much easier and included a host of features we now use all the time: pausing live TV, skipping commercials and more. Not long after, cable companies began packaging their set-top boxes with hard drives so that you didn't have to purchase special equipment or separate DVR subscriptions to get those same features.

Time shifting has become the norm in a lot of households, but it's not the whole story. Along with that convenience and enjoyment come a lot of less obvious complications: Although DVRs and other kinds of technology make television easier for us, the truth is that the industry is still trying to catch up.

To understand what the future might hold, we need to look at the history of television: how our viewing is measured, how our TV gets paid for and how ratings are being affected by time shifting and other advances in technology.
